Setting Up SolrCloud on GKE

Main text

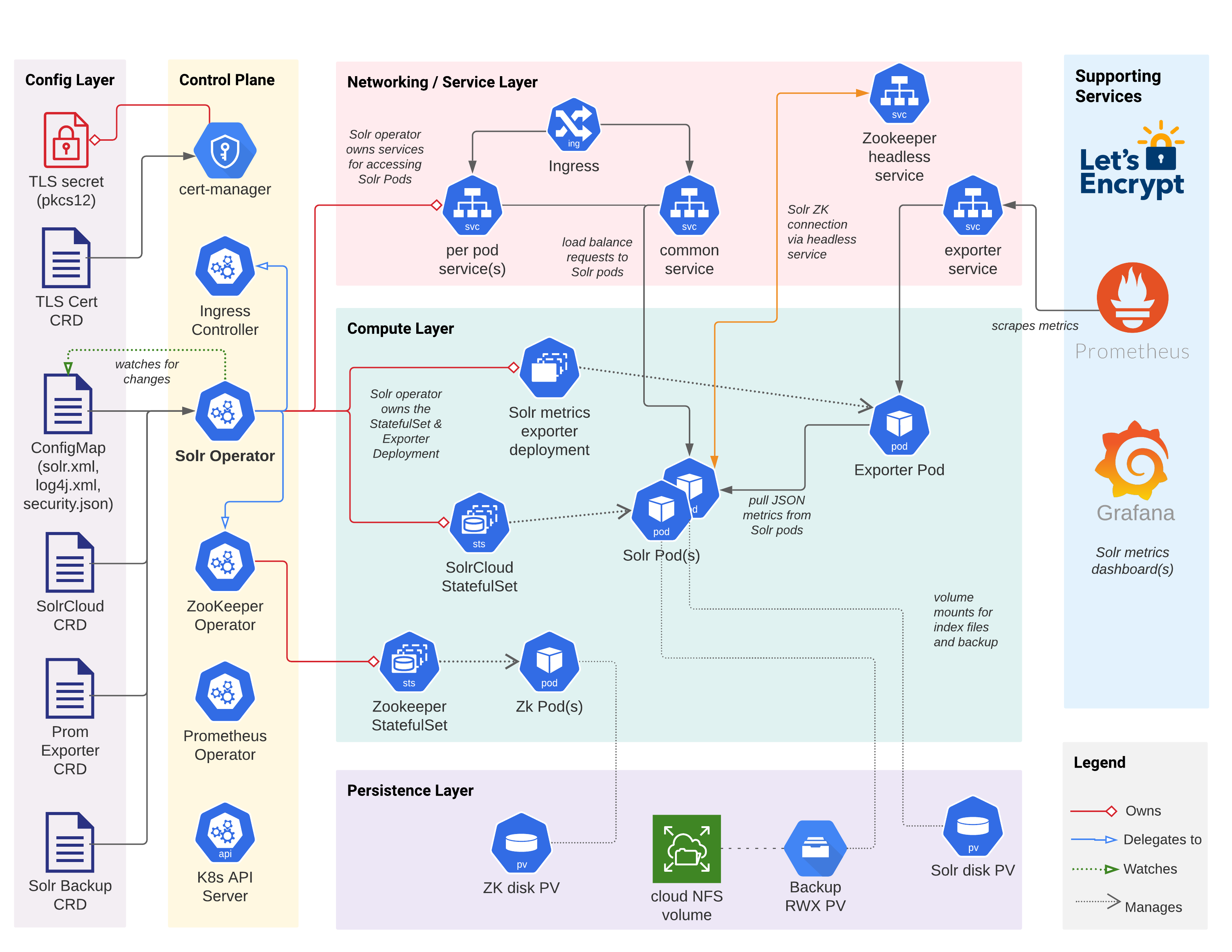

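Solr architecture

ref. 淺談Solr叢集架構 (A Brief Look at Solr Cluster Architecture)

Full architecture

SolrCloud is a distributed search solution built on Solr and ZooKeeper (see blog post 36, "Distributed Systems: Zookeeper").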

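Its main features are:

- Centralized configuration

- Automatic failover

- Near-real-time search

- Automatic load balancing of queries

- Automatic distribution

- Log tracking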

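For more on the SolrCloud architecture, see the following articles.

ref.

Let's get straight to the installation.

First, make sure Helm is installed on your machine. I looked for a way to install with plain kubectl apply but could not find one, so Helm it is.

ref. solr-operator

- First, add the Solr Helm repository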

helm repo add apache-solr https://solr.apache.org/charts

helm repo update

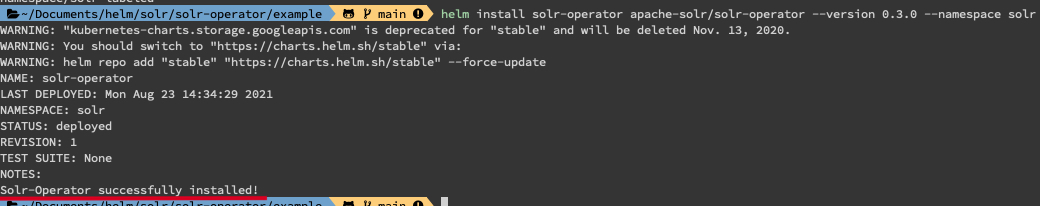

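- Install solr-operator into the solr namespace, so that Istio does not inject its istio-proxy sidecar into it. The solr namespace must already exist; otherwise add --create-namespace to the helm install command.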

kubectl create -f https://solr.apache.org/operator/downloads/crds/v0.3.0/all-with-dependencies.yaml

helm install solr-operator apache-solr/solr-operator \
  --version 0.3.0 --namespace solr

The operator is now installed (fig.1)

(fig.1)
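Before moving on, it can help to confirm that the operator pod actually came up. The following is only a minimal sketch: the helper name `show_pods` and the `KUBECTL` override variable are made up for illustration, and the only real command involved is the standard `kubectl get pods`.

```shell
#!/bin/sh
# Hypothetical helper for illustration; not part of solr-operator.
# KUBECTL can be overridden (for example KUBECTL=echo) to preview the command.
KUBECTL="${KUBECTL:-kubectl}"

# List the pods in the given namespace. After the Helm install, the operator
# pod should show up here and eventually reach the Running state.
show_pods() {
  "$KUBECTL" get pods --namespace "$1"
}
```

Calling `show_pods solr` lists the pods in the solr namespace used throughout this post.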

- Create solrCloud.yaml

    apiVersion: solr.apache.org/v1beta1
    kind: SolrCloud
    metadata:
      name: video
      namespace: solr
    spec:
      customSolrKubeOptions:
        podOptions:
          resources:
            limits:
              memory: 3Gi
            requests:
              cpu: 700m
              memory: 3Gi
      dataStorage:
        persistent:
          pvcTemplate:
            spec:
              resources:
                requests:
                  storage: 2Gi
          reclaimPolicy: Delete
      replicas: 3
      solrImage:
        repository: solr
        tag: 8.8.2
      solrJavaMem: -Xms500M -Xmx500M
      updateStrategy:
        method: StatefulSet
      zookeeperRef:
        provided:
          chroot: /explore
          image:
            pullPolicy: IfNotPresent
            repository: pravega/zookeeper
            tag: 0.2.9
          persistence:
            reclaimPolicy: Delete
            spec:
              accessModes:
              - ReadWriteOnce
              resources:
                requests:
                  storage: 2Gi
          replicas: 3
          zookeeperPodPolicy:
            resources:
              limits:
                memory: 500Mi
              requests:
                cpu: 250m
                memory: 500Mi

- Deploy solrCloud.yaml. The resource requests here are fairly high, so if the cluster runs short, allocate more CPU or memory.

kubectl apply -f solrCloud.yaml
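To get a feel for how demanding this manifest is, the requests in solrCloud.yaml can simply be added up. The sketch below uses only the numbers from that file (with 3Gi written as 3072Mi) and does not count the operator pod or anything else running in the cluster.

```shell
#!/bin/sh
# Values copied from solrCloud.yaml; 3Gi is expressed as 3072Mi.
solr_replicas=3; solr_cpu_m=700; solr_mem_mi=3072
zk_replicas=3;   zk_cpu_m=250;  zk_mem_mi=500

# Sum the per-pod requests over all Solr and ZooKeeper replicas.
total_cpu_m=$((solr_replicas * solr_cpu_m + zk_replicas * zk_cpu_m))
total_mem_mi=$((solr_replicas * solr_mem_mi + zk_replicas * zk_mem_mi))

echo "CPU requests:    ${total_cpu_m}m"
echo "Memory requests: ${total_mem_mi}Mi"
```

This comes to 2850m of CPU and 10716Mi (roughly 10.5Gi) of memory, which the node pool needs to have free before all six pods can be scheduled.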

- Since I deploy directly on top of Istio, I expose the web UI by adding a VirtualService.

    # assumption: use whichever networking.istio.io API version your Istio release serves
    apiVersion: networking.istio.io/v1alpha3
    kind: VirtualService
    metadata:
      name: istio-virtualservice-tools
      namespace: tools
    spec:
      hosts:
      - "*"
      gateways:
      - istio-gateway-tools.istio-system.svc.cluster.local
      http:
      - match:
        - uri:
            exact: /
        - uri:
            prefix: /solr
        name: solr
        route:
        - destination:
            host: video-solrcloud-common.solr.svc.cluster.local
            port:
              number: 80

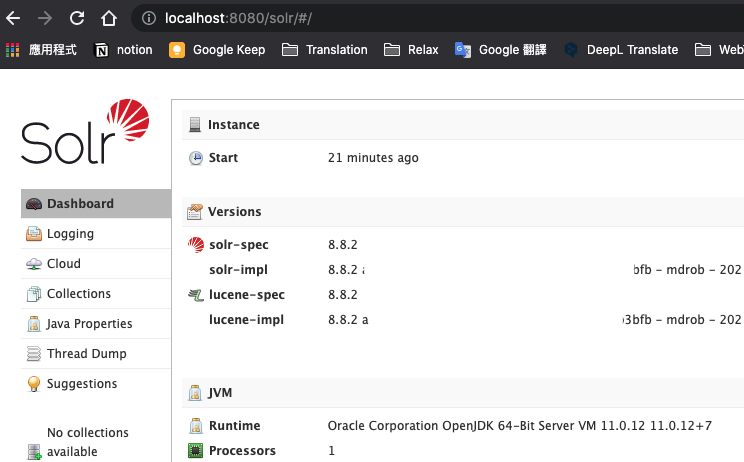

Alternatively, use port-forward from your local machine. Run the command below, then open http://localhost:8080 (fig.2)

kubectl port-forward service/video-solrcloud-common -n solr 8080:80

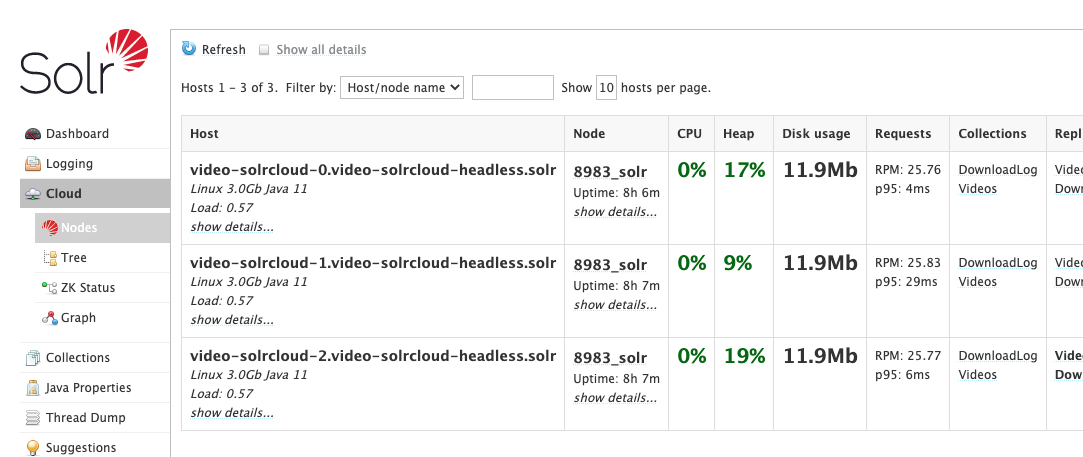

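Monitoring SolrCloud

SolrCloud already comes with a simple monitoring screen.

This part shows memory usage.

As you can see, though, it does not offer much data.

I had already set up Prometheus on the GKE cluster,

so this time I want to send the Solr data to Prometheus as well.

The first step is to export Solr's metrics.

The official GitHub repository documents a recommended method, but it requires Prometheus Operator. Without it, Prometheus will not find this exporter when it discovers metrics targets.

ref. Deploy Prometheus Exporter for Solr Metrics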

    apiVersion: solr.apache.org/v1beta1
    kind: SolrPrometheusExporter
    metadata:
      labels:
        controller-tools.k8s.io: "1.0"
      name: explore-prom-exporter
      namespace: solr
    spec:
      customKubeOptions:
        podOptions:
          resources:
            requests:
              cpu: 300m
              memory: 800Mi
      solrReference:
        cloud:
          name: "video"
      numThreads: 6
      image:
        repository: solr
        tag: 8.8.2
    ---
    apiVersion: monitoring.coreos.com/v1
    kind: ServiceMonitor
    metadata:
      name: solr-metrics
      labels:
        release: prometheus-stack
    spec:
      selector:
        matchLabels:
          solr-prometheus-exporter: explore-prom-exporter
      namespaceSelector:
        matchNames:
        - solr
      endpoints:
      - port: solr-metrics
        interval: 15s

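Because I did not want to install Prometheus Operator, I copied the Deployment YAML generated by the install above and created my own Solr exporter Deployment and Service for Prometheus to scrape.

Some may ask: why not deploy the manifest above as-is, then edit service.yaml and change

prometheus.io/port: "80"

to

prometheus.io/port: "8080"

and be done with it?

Because after the change, the Service keeps reverting to its previous state.

This is most likely the Solr operator at work: it keeps overwriting manual changes. I also could not find an operator setting that changes 80 to 8080, so the intended way for Prometheus to get the data is presumably the ServiceMonitor shown above.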

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      namespace: solr
      name: explore-prom-exporter
      annotations:
        deployment.kubernetes.io/revision: "1"
      # generation: 1
      labels:
        controller-tools.k8s.io: "1.0"
        solr-prometheus-exporter: explore-prom-exporter
        technology: solr-prometheus-exporter
    spec:
      progressDeadlineSeconds: 600
      replicas: 1
      revisionHistoryLimit: 10
      selector:
        matchLabels:
          solr-prometheus-exporter: explore-prom-exporter
          technology: solr-prometheus-exporter
      strategy:
        rollingUpdate:
          maxSurge: 25%
          maxUnavailable: 25%
        type: RollingUpdate
      template:
        metadata:
          creationTimestamp: null
          labels:
            controller-tools.k8s.io: "1.0"
            solr-prometheus-exporter: explore-prom-exporter
            technology: solr-prometheus-exporter
        spec:
          containers:
          - args:
            - -p
            - "8080"
            - -n
            - "6"
            - -z
            - video-solrcloud-zookeeper-0.video-solrcloud-zookeeper-headless.solr.svc.cluster.local:2181,video-solrcloud-zookeeper-1.video-solrcloud-zookeeper-headless.solr.svc.cluster.local:2181,video-solrcloud-zookeeper-2.video-solrcloud-zookeeper-headless.solr.svc.cluster.local:2181/explore
            - -f
            - /opt/solr/contrib/prometheus-exporter/conf/solr-exporter-config.xml
            command:
            - /opt/solr/contrib/prometheus-exporter/bin/solr-exporter
            image: solr:8.8.2
            imagePullPolicy: IfNotPresent
            livenessProbe:
              failureThreshold: 3
              httpGet:
                path: /metrics
                port: 8080
                scheme: HTTP
              initialDelaySeconds: 20
              periodSeconds: 10
              successThreshold: 1
              timeoutSeconds: 1
            name: solr-prometheus-exporter
            ports:
            - containerPort: 8080
              name: solr-metrics
              protocol: TCP
            resources:
              requests:
                cpu: 300m
                memory: 800Mi
            terminationMessagePath: /dev/termination-log
            terminationMessagePolicy: File
          dnsPolicy: ClusterFirst
          restartPolicy: Always
          schedulerName: default-scheduler
          securityContext:
            fsGroup: 8080
          terminationGracePeriodSeconds: 10
    ---
    apiVersion: v1
    kind: Service
    metadata:
      namespace: solr
      name: explore-prom-exporter
      annotations:
        prometheus.io/path: /metrics
        prometheus.io/port: "8080"
        prometheus.io/scheme: http
        prometheus.io/scrape: "true"
      creationTimestamp: "2021-09-03T05:52:23Z"
      labels:
        controller-tools.k8s.io: "1.0"
        service-type: metrics
        solr-prometheus-exporter: explore-prom-exporter
    spec:
      ports:
      - name: solr-metrics
        port: 80
        protocol: TCP
        targetPort: 8080
      selector:
        solr-prometheus-exporter: explore-prom-exporter
        technology: solr-prometheus-exporter
      sessionAffinity: None
      type: ClusterIP
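The long `-z` argument in the Deployment is just one host entry per ZooKeeper pod behind the headless service, joined by commas, followed by the chroot. As a rough sketch, it could be generated instead of typed by hand. The helper name `zk_connection_string` is hypothetical, and the naming pattern is inferred only from the value used in this post, so check it against the services in your own cluster.

```shell
#!/bin/sh
# Hypothetical helper: build the ZooKeeper connection string for solr-exporter.
# Arguments: SolrCloud name, namespace, ZooKeeper replica count, chroot.
# The host naming pattern is an assumption taken from the manifest in this post.
zk_connection_string() {
  zk_cloud="$1"; zk_ns="$2"; zk_count="$3"; zk_chroot="$4"
  zk_hosts=""
  zk_i=0
  while [ "$zk_i" -lt "$zk_count" ]; do
    zk_host="${zk_cloud}-solrcloud-zookeeper-${zk_i}.${zk_cloud}-solrcloud-zookeeper-headless.${zk_ns}.svc.cluster.local:2181"
    if [ -z "$zk_hosts" ]; then
      zk_hosts="$zk_host"
    else
      zk_hosts="${zk_hosts},${zk_host}"
    fi
    zk_i=$((zk_i + 1))
  done
  # The chroot is appended once, after the last host.
  printf '%s%s\n' "$zk_hosts" "$zk_chroot"
}

zk_connection_string video solr 3 /explore
```

With the values from solrCloud.yaml (name video, namespace solr, 3 replicas, chroot /explore), this prints the same string that is passed to `-z` above.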

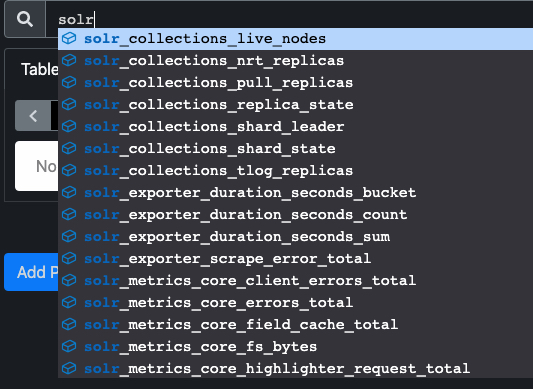

Finally, check in Prometheus whether any Solr data has arrived (fig.4)

(fig.4)
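The exporter can also be checked directly, without going through Prometheus. The sketch below reduces the exporter's text output to a list of metric names. The helper name `solr_metric_names` is made up for illustration, and it assumes the metric names begin with `solr_`.

```shell
#!/bin/sh
# Hypothetical helper: read Prometheus text-format output on stdin and print
# the unique metric names that start with "solr_".
solr_metric_names() {
  # Keep sample lines only (comment lines start with "#"), strip the labels
  # and the value, then de-duplicate.
  grep -E '^solr_' | sed -E 's/[{ ].*$//' | sort -u
}
```

For example, with `kubectl port-forward service/explore-prom-exporter -n solr 8080:80` running in another terminal, `curl -s http://localhost:8080/metrics | solr_metric_names` lists what the exporter currently exposes.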

The data is there, but we do not yet know which metrics to use for monitoring, so let's start by looking for a ready-made Grafana dashboard.

Solr Dashboard

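Download it, import it into Grafana, and we are done.

Wrap-up

Next comes the hands-on part, in post 39, "First Experience with SolrCloud".

As an extra reference: the steps in the link below no longer apply, but the basic installation approach is still worth a look.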

Running Solr on Kubernetes